Panel: Don't duck up the Numbers: Where AI Hype Meets BI Reality

Speakers

Barr Moses is the CEO and Co-founder of Monte Carlo, the lead of the data observability category backed by Accel, GGV, Redpoint, ICONIQ Growth, Salesforce Ventures, IVP, and other top Silicon Valley investors. Monte Carlo works with companies such as Cisco, American Airlines, and NASDAQ to help them drive positive business outcomes with reliable data and AI.

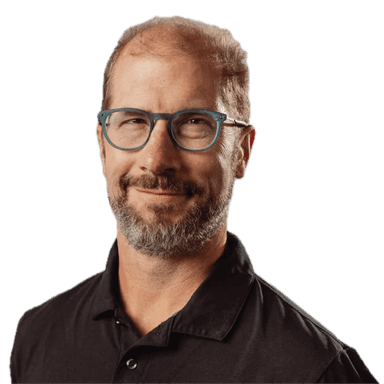

Barry McCardel is the CEO and co-founder of Hex. He previously worked at TrialSpark as a Senior Director, Operations & Strategy, and at Palantir Technologies, where he led teams at the intersection of product development and real-world impact.

Colin Zima is the CEO of Omni. Previously, Colin served as the Chief Analytics Officer and VP of Strategy at Looker, and a Director of Product at Google Cloud. During his time at Looker, Colin led product, analytics, customer success, support, and strategy. Before Looker, Colin served as the Director of Data Science and Business Intelligence at HotelTonight, which he joined through the acquisition of his startup, PrimaTable. Prior to founding PrimaTable, Colin spent several years as a statistician at Google in search ranking and as a synthetic credit structurer at UBS.

Prior to founding MotherDuck, Jordan was at SingleStore, where he was Chief Product Officer. Previously, he was a product leader, engineering leader, and founding engineer on Google BigQuery.

Tristan Handy is the Founder and CEO of dbt Labs. dbt is an emerging leader in the data transformation space. It's used by 60,000 companies including JetBlue, HubSpot, Dunelm, and SunRun. Tristan has over two decades of experience as a data practitioner working in both large enterprises and startups. His expertise and data industry best practices have influenced thousands of subscribers and listeners weekly via his newsletter and podcast covering analytics engineering.

0:00[music]

0:05[music]

0:10So, thrilled to have you guys all up here, all at once. When we saw the names of the people who were coming, we were like, "Oh, wow, we've got Tristan. Oh, wow, Barr is going to come." I figured Omni is a close partner, and that they

0:28would join, and then Barry [laughter] >> And then Barry. >> Then Barry. It's good to be up here with fellow titans.

0:35>> Are you saying that you expected fewer of us to join and so you had to find more chairs?

0:38>> Oh, well, yeah, we needed to, you know, spread them out a little bit. That's why the chairs are pretty large. But anyway, thanks, everybody.

0:47We're going to talk today about AI and BI. I was trying to figure out a way to add, like, CI, you know, continuous integration. And then DI. What's DI, data integration?

1:00>> Data integration. EI, I got stuck at E because I'm not sure what that would be, but, well, at least we'll start with the AI and the BI part of things. So, everybody knows AI is changing how people are building software. But also, I think, as people in the data business,

1:20it's not changing how data people are doing things as fast. And I'm just wondering, maybe I'll start with you, Colin: why is it that

1:36life as a data person is not changing the way it is for a software person?

1:39>> Yeah, I think there are two main reasons. So first, I think it is starting to change. I do think that it's shifting a little bit, but it is happening more slowly than other things.

1:49When you think about the use cases that natural language is really good at right now, they are use cases where the user can interact with the result set in a really clear way. So editing code, for example: these are places where it supplements what the user is doing instead of completely replacing what the user is doing. And one of the challenges

2:09in doing this for data is that you really need a lot of UI attached to a lot of the things that natural language is doing, to be able to introspect results and debug things. And so, when done well, it is very deeply integrated with the application layer, which just means that, you know,

2:30there are a lot of these young companies that just drop a text box in and are like, "Maybe this is your BI tool now." But I think the better use cases actually require building in a much more cohesive way with the rest of the app. You know, semantic layers, all of these other things that you actually need to go

2:45implement to do those things well. >> Tristan, I know you bought a semantic layer company. I'm wondering what your thoughts are on semantic modeling layers as the solution for AI-based BI.

3:01>> So, I had a great conversation with a gentleman named Jay Sobel at Ramp while at Coalesce this year, and

3:14we started off completely disagreeing with each other on this topic. He said you don't need semantic layers to do good interactive question and answer with AI. And I started off with the maximalist position of, like, "How could you possibly not? None of your answers are going to be correct." And we got into it, and what we found out

3:41was that it's all about the query patterns. So at Ramp, and Jay's written about this publicly, they have a very successful tool that they built internally, where people go to a Slack channel, they ask questions, and they get answers. They measure the usage of this thing, and it's, like, awesome, up and to the right, exactly

3:59what you want. It seems like more of us should be experiencing that, but actually it's not happening quite as quickly or as broadly as you would want. And the difference in

4:12fact patterns for the different queries turns out to be that a lot of people just want a really straightforward answer. They don't want to know how revenue trends over time, or cost of goods sold. They want, like, "Who was the sales rep on this account?" A lot of it is

4:30actually very straightforward lookup stuff. And if you can provide all the right context, and there's a lot more to say about that, but if you can provide all the right context on, like, single-table queries or maybe single-join queries, you can actually do a great job there. But at Ramp they have not opened it

4:51up to the CFO org, because that's the fact pattern that really needs a semantic layer. So anyway, we're figuring out this stuff in real time as people get it deployed.

5:02>> I can jump in and add my perspective. On the claim of data being sort of slower, or kind of slowish, to adapt: I don't think that's an anti-pattern. I think that's something that we've seen pretty consistently in the last, you know, couple of decades: software

5:18engineering adopts, and data does sort of lag behind that. I think that is true, and I think we're seeing the same thing for AI. I agree with Tristan. If you think about the drivers of that, I think there are two primary things that can help accelerate it. The first is

5:35actually productizing generative AI. It hasn't been productized fully, and there are some foundations that are missing for that: having a semantic layer or some way of understanding it or surfacing it to users; strong security, something that's come up a lot more, especially in recent weeks, in recent news. So there are a lot of parts to what it actually means for

5:57generative AI to be a product. And then the second thing, just to note, is I think these things just take time, because of human beings. If you think about the last decade, I don't know if anyone remembers digital transformation. That was a big thing once, remember? And if you look at the last decade, all the value in the

6:15economy has been realized because of digital transformation: enterprises finally adopted it, are moving to the cloud, etc. So generative AI is actually the next decade of value creation, not the past decade, and that shift takes time. So, as human beings, if we can accelerate that... You know, I think COVID-19 and the pandemic did

6:37accelerate digital transformation. I think generative AI has a different pattern to it, but again, it's about how quickly we can adopt and change.

6:46>> Yeah, I have a lot to share here. Going to this question of why it felt like it lagged, there are a couple of big things. I think a lot of AI use cases fundamentally weren't really working, in and across software, until about a year ago. Even AI for coding was

7:04still pretty primitive. You had new models come out that made these things more capable and really unlocked these more agentic patterns.

7:11So that's one: that just had to show up. And then I think in data it's a particularly tricky thing, because in coding the main use case is you're going to have it generate some code, and, you know, in Cursor or a product like that, an expert is going to look at it and be

7:28able to understand, you know, is this right, is this wrong. And if all you want to do is SQL gen or Python gen, you could kind of adopt a similar pattern. I think the real holy grail everyone wants in data, though, is to have a large number of non-experts be able to use natural language to do work.

7:45>> And unlike even the vibe coding tools, like a Lovable or a Replit or something, that are also aimed at non-experts: these are mostly used to generate, like, static websites, so you can just sort of look at it and be like, "Is this good?" But it's much harder

7:58to just sort of eyeball that in data, and you have this high bar for accuracy. So you talk about semantic models, all of that. I will just disagree, or quibble, with one thing you said, Tristan, which is, I think the kind of cartoon version of this, certainly the version a lot of people jumped to when they first saw LLMs,

8:14was like, "Oh, I'll ask a question," these sort of thin BI trivia questions, like, "How many widgets did we sell last quarter?" That's almost the cartoon sense of what people wanted, and there were a lot of startups that came out with these sort of AI-for-BI chatbot things

8:29that were sort of targeted at that. I think what's interesting is that real humans, in reality, when using data, have a lot of follow-up questions, and they want to ask things in a deeper way. And I think the more agentic models that have come out in the last year have really

8:45lent themselves to that, but you have to have a product that is architected to take advantage of that. And so, the things we see people do in Hex with these features, whether they're accessing it via Slack or our UI or anywhere else: people will ask a first question and then have, like,

9:01long threads. We've had users go back to threads in our product over weeks, asking follow-up questions, going deeper on something, sharing these things, and it's just a lot more complex. And being accurate all the way through a long interaction like that is a much, much bigger challenge than a pretty simple "go retrieve a single measure

9:18and come back with a calculation." >> But that's also an interesting way of doing it, just because then you have this thread of context, you know, that somebody's been asking about. You don't have to just one-shot the, like, "Hey, this is the query somebody's asking." You kind of know what they're

9:34talking about, probably the tables they're using, etc. I like that we have some different opinions on some of these things. So, softball question: how are you incorporating AI into your products? Barr, we can start with you.

9:51>> Yeah, happy to. How am I incorporating AI? Good question. So, I think in

9:58general, I'll just build on some of what we spoke about there: up until about a year, a year and a half ago, I think the models were not quite there, at least from our perspective, to really deliver value to our customers.

10:11So just for context, we work with data and AI teams, and we help them make sure that their data and AI products are trusted and reliable, by knowing when issues happen and helping resolve them. And so for us, you know, we started on our journey building with AI probably a couple of years ago, and it was only when, I

10:28think, 3.5 came out that there was a turning point. I remember working with our team and I was like, "Holy... this can actually really be radically different." That was the big light bulb moment for me.

10:42And so, when you think more broadly about what our customers are trying to do, there are probably two or three big problems that are keeping them up at night, if you will. The first is they're trying [clears throat] to use AI in their day-to-day to automate the manual work that they're doing. And so that can be

11:00if they're, you know, doing manual queries, or if they're manually writing rules, or manually writing tests. There's a lot throughout the data life cycle that can be automated. So the first thing that we're doing is building agents to help them automate their work.

11:13Maybe the most powerful example that I've seen is what we call a troubleshooting agent. So just for a little bit of context: when someone gets an incident, or a data issue is detected, the person who's on the receiving end of that has to go through a lot to

11:29actually understand what's going on. So, you know, you might see that there's a problem, and then you have to start figuring out what the hell went wrong throughout the entire data and AI estate. Like, did someone push a code change somewhere? Did someone just, you know, fat-finger something? There are a lot of things that can go wrong.

11:45And so, the manual process of doing that is actually quite tedious for a human being. Interestingly, LLMs can totally mimic the behavior of human beings and dramatically cut down on the amount of time that people spend doing that. So just to give you this in really specific terms: when a data analyst receives, or kind of sees, that there's an

12:04issue, the first thing that they do is come up with a list. I mean, I used to do this: you come up with a list of, like, 10 hypotheses for what could have gone wrong, like what exactly broke, and then you start investigating every single hypothesis and try to eliminate it. And that can take weeks or years. When

12:21you actually use LLMs, and deploy basically an LLM that then spawns off an agent for each of these hypotheses, you can very quickly, recursively, triage and understand what exactly is the root cause and pinpoint that. And so that is, in my mind, a dramatically different way of working, like really a 180. It's probably the most powerful

12:42example that I've personally seen. And so being able to say, "Hey, what was the root cause of this issue? Okay, got it.

12:48Now what do I need to do? Do I need to backfill the data? Okay, can you help me write code to do that?" Etc. Very, very different way of working. And so that's sort of the first thing that we're incorporating with AI. Then the second thing is, you know, I think people are... well, they'd like to build

13:03agents. People are building agents everywhere. And those agents oftentimes can be racist or biased, or can have output that just doesn't fit what you were hoping for. And so helping make sure that those agents are actually reliable in production is the other really big thing that we're focused on. And maybe just a last

13:21comment: I think the interesting thing that I've found in all of these new workflows is that, you know, as an example, our troubleshooting agent, if we had just slapped that onto a new product with nothing other than calling it a troubleshooting agent, it would bring very little value. The value that we are able

13:42to bring to our customers is because this troubleshooting agent is built on foundations that we've built: collecting metadata, data, query logs. There's a lot of rich data that we can collect about our customers that our troubleshooting agent can use, which is what actually brings all this value. And so I just found, you know, reflecting on that,

14:00that, you know, I think it's a cliche at this point to say that AI is only as good as the data it runs on, but actually seeing it in production makes a really big difference. So I think that's always a good reminder for myself.
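The hypothesis fan-out Barr describes for the troubleshooting agent (list candidate causes, investigate each one in parallel, drill into the ones that survive) can be sketched roughly as follows. This is a minimal illustration in Python, not Monte Carlo's implementation: the `Hypothesis` class, the `triage` and `investigate` functions, and the sample evidence are all hypothetical, and plain functions stand in for the LLM agents that would do the real investigation.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Hypothesis:
    """One candidate explanation for an incident (hypothetical structure)."""
    name: str
    check: Callable[[Dict], bool]  # stand-in for an agent's investigation
    children: List["Hypothesis"] = field(default_factory=list)  # narrower follow-ups


def investigate(h: Hypothesis, evidence: Dict) -> List[str]:
    """Return the most specific confirmed causes under this hypothesis."""
    if not h.check(evidence):
        return []  # eliminated: stop exploring this branch
    confirmed = triage(h.children, evidence)  # recurse into narrower hypotheses
    return confirmed or [h.name]  # nothing narrower confirmed: report this one


def triage(hypotheses: List[Hypothesis], evidence: Dict) -> List[str]:
    """Fan out one worker per hypothesis and collect the confirmed causes."""
    if not hypotheses:
        return []
    with ThreadPoolExecutor(max_workers=len(hypotheses)) as pool:
        branches = pool.map(lambda h: investigate(h, evidence), hypotheses)
    return [cause for branch in branches for cause in branch]


# Made-up evidence of the kind an observability tool might collect.
evidence = {
    "recent_deploys": ["orders_model v42"],
    "row_count_drop": 0.6,
    "schema_changed": False,
}

hypotheses = [
    Hypothesis("upstream schema change", lambda e: e["schema_changed"]),
    Hypothesis(
        "code change",
        lambda e: bool(e["recent_deploys"]),
        children=[
            Hypothesis(
                "code change in orders_model",
                lambda e: any("orders_model" in d for d in e["recent_deploys"]),
            )
        ],
    ),
    Hypothesis("source stopped sending data", lambda e: e["row_count_drop"] > 0.9),
]

root_causes = triage(hypotheses, evidence)
print(root_causes)
```

The recursion is the point of the sketch: a confirmed broad hypothesis spawns narrower ones, so the search converges on the most specific cause the evidence supports. The quality of `evidence` (metadata, query logs, deploy history) determines how useful any of this is, which matches the remark above about foundations.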

14:15>> Colin, I know you've been sort of skeptical about AI-related features, but I have noticed there is a little AI button in Omni.

14:24>> I started deeply skeptical. I think a lot of the reason was just, like, you know, "I'm smarter." But I think what we found is it's actually a little bit the inverse. I think we're less focused. We're doing some of the agent stuff, of course, like, you know, "let it

14:40go solve your business for you." >> I actually think the hardest problem is getting it to reliably return really high-quality results.

14:47>> So, we're spending a lot of time on just trying to make the basics work really, really well. And I know that sounds incredibly boring and trivial, but we found that people were trying to use it to do a lot of the lookup-style use cases. And if it doesn't understand fiscal calendars, and

15:04that when a sales rep asks for closed business they mean closed-won business, not just closed business, it just creates this sort of indirection in the experience that is just unfriendly. And so we're spending a lot of time really just trying to get the right frameworks so it can answer the basic questions well. And I think

15:21from there the models will essentially let us do the higher-order agentic stuff. The other big thing is we've tried to really sprinkle it in all over the product. So rather than thinking about AI as a replacement on the side or some new way of querying, it's a way that you can add table

15:36calculations. It's a way that you can add filters. It's a way to ask follow-up questions on dashboards. There are just a lot of different little places that can be better. It's a way that you could configure filters on a dashboard.

15:47And it's a little bit more evolutionary, versus a full rebuild of the experience.

15:53>> We had kind of a fortunate accident, I would say. You know, we started the company not too long before GPT or ChatGPT or whatever, and the way we architected the product was very principled, at the UI and the data model layer, which is around these cells. So a lot of people, I

16:13think, think of Hex primarily, originally, as a sort of notebook, and we do a lot more now, but the way we do those things is all still around that cellular model, where everything's a cell: cells accept and return data frames, and they execute in a reactive graph. That's sort of the Tao of Hex, as we

16:29say. And it turns out that that actual representation is really, really nice for these agentic systems, because we basically expose the cells to the model as tools, and we expose our underlying context, whether it's schemas or semantic models we're connected to, as context. And there was just something really magical as the models actually became agentic and really

16:50worked. As Barr was saying, like, 3.5, 3.7, 4, 4.5 have just gotten better and better, the different Claude models. It's just kind of like you happen to be in the right place at the right time from a product architecture perspective.
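To make the cell model Barry describes a little more concrete, here is a minimal sketch in Python of cells that take and return data frames and re-execute reactively when something upstream changes. It is an illustration under stated assumptions, not Hex's implementation: the `Notebook` class, its method names, and the `tool_specs` output format are hypothetical, and a list of row dicts stands in for a real data frame.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Optional

Frame = List[dict]  # stand-in for a data frame: a list of row dicts


class Notebook:
    """Hypothetical reactive graph of cells; each cell maps input frames to one frame."""

    def __init__(self) -> None:
        self.funcs: Dict[str, Callable[..., Frame]] = {}
        self.deps: Dict[str, List[str]] = {}
        self.outputs: Dict[str, Frame] = {}

    def cell(self, name: str, inputs: List[str], fn: Callable[..., Frame]) -> None:
        """Define or redefine a cell and the upstream cells it reads from."""
        self.funcs[name] = fn
        self.deps[name] = list(inputs)

    def _downstream(self, name: str) -> set:
        """The named cell plus every cell that depends on it, directly or not."""
        dirty = {name}
        grew = True
        while grew:
            grew = False
            for cell, inputs in self.deps.items():
                if cell not in dirty and dirty.intersection(inputs):
                    dirty.add(cell)
                    grew = True
        return dirty

    def run(self, changed: Optional[str] = None) -> List[str]:
        """Run all cells, or only those affected by `changed`, in dependency order."""
        dirty = set(self.funcs) if changed is None else self._downstream(changed)
        executed = []
        for name in TopologicalSorter(self.deps).static_order():
            if name in dirty:
                args = [self.outputs[d] for d in self.deps[name]]
                self.outputs[name] = self.funcs[name](*args)
                executed.append(name)
        return executed

    def tool_specs(self) -> List[dict]:
        """Describe each cell the way one might describe a tool to an agent."""
        return [{"name": n, "inputs": self.deps[n]} for n in self.funcs]


nb = Notebook()
nb.cell("orders", [], lambda: [
    {"region": "EU", "amount": 10},
    {"region": "US", "amount": 25},
    {"region": "EU", "amount": 5},
])
nb.cell("filtered", ["orders"], lambda rows: [r for r in rows if r["region"] == "EU"])
nb.cell("total", ["filtered"], lambda rows: [{"total": sum(r["amount"] for r in rows)}])

first_run = nb.run()

# Redefine one cell; only it and its downstream cells execute again.
nb.cell("filtered", ["orders"], lambda rows: [r for r in rows if r["region"] == "US"])
second_run = nb.run(changed="filtered")
print(first_run, second_run, nb.outputs["total"])
```

Because every cell has the same shape (named inputs in, one frame out), describing cells to a model as tools is mostly a matter of listing names and inputs, which is roughly what `tool_specs` gestures at.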

17:01And so, you know, not disagreeing with anything anyone else has said, but I think there's something very interesting for us in how you can take the things you were already good at walking into this and merge them in, while there are also other things you need to rethink. And as we're building new features now, it actually has

17:15changed the way our engineers work. When you're building a new feature, you need to be thinking about, like, how am I going to represent this as a tool to the agent? How is this new thing that we're integrating with going to be represented as part of a context bundle we're going to provide, and be able to do search

17:27across it? And what's our search infrastructure like? So in some ways

17:33we kind of had this nice tailwind walking into this. In other ways, it's really challenged the way we're building certain parts of the product, and that's been extremely interesting. Very cool.

17:43>> The thing I was going to say before you so rudely interrupted... >> I'm so sorry. Please, we're all dying to hear what you have to say. [laughter] >> See if I can keep my train of thought together. Well, so, people that build products in analytics have always operated in this, like,

18:02really horizontal way. So if

18:07you even go back to Excel, Excel doesn't know anything about the data that's in it. You are supposed to bring all the knowledge, and the job of the product builder is just to build generic tools where you can do whatever you want. And in a lot of ways you can think of dbt that way, you can

18:26think of Hex that way, any of these. We are here to build computational devices and user interfaces that allow humans to bring intelligence and unlock computers to answer questions. But as we start getting into AI, you find that the job of the product maker is different. The job of the product maker

18:51is to actually go deeper into the epistemology of the questions that are being asked. So you find that you need different context and different tools to answer a lookup question like we talked about before. You need different context and different tools to answer questions that start with "why." Why questions

19:13are super interesting and are historically, like, a pay grade higher than what questions. And, you know, whereas answering a what question will happen within 15 minutes or an hour of an analyst's time, a why question, you'd reasonably assume, could take 3 days or 5 days. That's a research project. And so a lot

19:39of this in AI, you have to target

19:43these very specific user fact patterns, and you have to engineer that context for them. So how do you set up a tool chain to answer a why question? Well, you have to actually give it a lot of context

20:03about your business, about whatever the domain is. And it turns out that none of our tools at ground zero, or, you know, t equals 3 years ago, had that type of context. And

20:18so then the question is, well, what is the right form factor to start supplying that? And it could literally just be a bunch of text files in a repo. But

20:30the way that you structure it and get users to supply that, all of this stuff is a very interesting question set. >> AI is changing super quickly. New models come out, people are, you know, finding some things work, some things don't work. And I think as, you know, somebody who leads a company, you have

20:48to decide which bandwagons you're going to jump on and which ones you're going to wait on. And I think if you want to be at the forefront of some of these things, you kind of have to be willing to accept that some of these are going to turn into

21:02dead ends. And maybe

21:06start with you, Barry, because you've been more open than, I think, other folks about saying, like, "Hey, we tried this and it didn't quite work." So what are some things that you tried that didn't work, and what lessons have you learned?

21:20>> Tried a lot of things that didn't work. [laughter] That's half the fun. [clears throat] I spent a bunch of time playing with the GPT-3 playground API, like, before ChatGPT

21:31came out, and it was really exciting and interesting. I actually had been kind of conditioned to be an ML skeptic earlier in my career. When I was at Palantir, people think it does a lot of ML stuff, and it does now, but at that time we actually became very

21:45skeptical that ML was actually ready to be applied. And so it was kind of a big radicalization, and almost an overcorrection, where I was like, "We know this is going to be incredible technology to be able to solve these problems." But it took the better part of two years, almost two whole years, of playing and tinkering

22:03with this stuff to really figure out how it was going to work. And there were things we built during that period that have been incredibly useful now that the models have gotten really good: a lot of the search infrastructure we built, the way we've rearchitected certain things. There are also other things we completely threw away. There are a bunch of features we built with the

22:19sort of pre-agentic models that were, I think, pretty good one-shot, call-and-response type LLM things, like "here's a prompt, write me a SQL query" stuff, that were fine but not extraordinary. There were cases where we were trying to get models to do things where we were really torturing them into it, and it just wasn't doing it

22:36reliably enough. Some stuff we released; a lot of stuff we never really released, because we were like, "This isn't good enough, it's not at our quality bar."

22:44And so I would actually maybe say the biggest thing we tried that didn't work was the way we organized the team.

22:51And I wrote a blog post about this a couple of months ago, titled, like, "We got rid of our AI team." Because at the beginning of the year we had our different product teams, and then we had a separate AI team. It was called our Magic team. That was how we had sub-branded all of our AI features.

23:07It's a pretty cool name, right? Hex Magic. It was neat. And there was a lot of cool swag around it.

23:14But walking into the year, something just felt super wrong about it, because what was winding up happening is we had product teams building features, and then we had the AI people over here that were figuring out how to, like, remote-control them with LLMs.

23:25And early in the year, we kind of blew that up. We candidly exited some people, but we reorganized the company and basically were like, "Hey, everyone's doing AI stuff now." And we have an AI platform team that focuses on things like search infrastructure, research, and our experimentation and evals harness. But then each of the different persona teams that are focused

23:47on the different types of personas who use Hex are fully empowered to prioritize AI features in their roadmap along with other stuff. And in any given week, those teams are going to ship vanilla things, like an improvement to a, you know, new chart type or something, but also be shipping new agentic features, maybe like how

24:02we're exposing those charts to agents as tools to be used. And it was not obvious earlier in the year that that was going to be the right move, but it's actually been extraordinary. The number of people we have now touching these features is really high. The analogy I always go back to is, like, how many

24:21people here remember Sketch, the product design tool? Okay, so Sketch had a cloud team, right? Figma came along and Sketch was like, "Oh, we should do cloud." And they're like, "Yeah, we do cloud. Those people over there are doing the cloud stuff," right?

24:36And for those of you who don't remember Sketch, Sketch was this really amazing, beautiful local Mac app for doing product design. And when Figma came along, it was almost like Figma was this toy. It was based in the cloud; it wasn't nearly as feature-complete.

24:51And my conviction has only grown that you kind of have to adopt a similar mindset in this new era. You can't have a separate AI team. It has to be the way the whole company is thinking about building. And to just extend that analogy, Figma never had a cloud team. It's like, everything's

25:05cloud. And unless you're thinking that way, I think there are probably a lot of missed opportunities and sort of local-maximum hill climbing to be had. I also think, very similarly, AI is not an upgrade.

25:18It's not like you get your base product and you can pay more for AI.

25:21>> Oh, it has to be... like, you have to target

25:25AI to your broad set of users. >> I had this big argument at my board meeting, maybe a year and a half ago, when there were a lot of SaaS companies doing AI upgrades. We've all seen these, right? Slack was trying to sell you an AI upgrade. Loom was trying

25:38to sell you an AI upgrade. Notion was trying to sell you an AI upgrade. There wasn't a SaaS tool you used that wasn't trying to add, like, "[SaaS name] AI" as an add-on. And I always felt like that was so stupid. I actually wrote a blog post, like, "We're not charging for an AI add-on," because I was like, that

25:52implies that there's a version of the product that is non-AI that you want to continue selling. It just became really obvious to us that the only version of Hex we want to sell is one with AI features turned on, because they're so revolutionary. It's completely changed the way we and our customers use the product. And so I

26:07just think you have to approach it that way and kind of go all in. And I don't think that would have worked two years ago, because the models weren't ready yet. But I think the technology is really working, and you kind of have to adopt that mindset in the way you monetize it,

26:20the way you organize the team, the way you're talking to customers about it. That's my opinion.

26:25>> Yeah, we similarly had an argument about a button in the product. Should it say troubleshoot with AI or just troubleshoot? And there was like so much heat about that. And you know, >> is it like Monte Carlo AI? Is it just Monte Carlo? This is how Monte Carlo works.

26:37>> Exactly. And actually the thing what convinced sort of us or we at the end was really our customers like for us is I don't know if this is a hot take but um you know we started data observability working with data teams and then suddenly everyone changed their LinkedIn to be from data governance now it's data and AI governance and if

26:57before you were a data engineer now you're a data and AI engineer and like suddenly all of our customers turned from chief data officer to chief data and AI officer and so you know as a company we had to grow and expand with that right and so I think what we've learned from that is first of all I

27:13agree with you in product building yeah like I think it's sort of table stakes for AI to be everywhere that's that's definitely part of it but also in the mindset um but also for the way that our customers think about it so I think the most sort of forward thinking customers view data and AI as one system and one

27:31estate, and they don't think of it as two separate teams or two separate organizations. There's certainly, like, a lot of different teams building AI, whether it's, like, software engineers or data engineers or AI, that's a whole other, like, fight that we can do around, like, who should be building with AI. Um, but by and large, like, I do think those that are

27:48thinking about the data and AI estate as one are actually those that are finding the most traction with their solutions. If you keep on trying to bifurcate the two, having separate teams building here and separate teams building here, like, you just end up clashing or building redundancies and such. So, um, yeah, hot take: data and AI is one and

28:05the same. >> Yeah, I think ours is actually a little bit different. Um, like we're obviously building a BI tool. Um, and as we build out a bunch of the AI stuff, the goal is the goal at the beginning was sort of like we'll make it magic and it will just solve all of your problems. And so,

28:22like every time there was a quality issue, return your result set, we were like, okay, how do we make the system prompts better so that it solves all the problems? And I think we really hard inflected. Obviously, like, we're also a semantic layer. We were just like, let the user put context everywhere. So, like, you know, if it's not choosing the right

28:38date put in a comment that says use this date by default. And it sounds so trivial but uh I'm also sort of the data team at our company. And one day a week I just go in and actually look at the AI logs and I look at queries that people are writing. And again like this sounds so naive as like the state-of-the-art

28:56for tuning the system but I look at the queries. I look at the result set sometimes and then I add a little bit of context that tries to make it better.

29:03>> It's vibes vibes based eval. >> It literally and it's like it's everything that a statistician would tell you not to do because it's like systematic overtuning.

29:11But systematic overtuning appears to be how to add like the best context for some of these use cases.

29:16>> And it was one of these sort of full inflections that's like instead of trying to evaluate your system statistically and sort of make it perfect, just like give end users hooks to do things. And it turns out when they just say what the system can do, it can actually do what it's supposed to do.

29:29But it's hard to figure out whether that's actually the future because it seems so naive also and like less magic.

29:37Well, >> there's a big debate about evals. I mean to that point like I think the first generation of these tools and we certainly did. We built this like really we had like thousands of very like clear evals of like when the user has this prompt this is the query and this is the result set you should get. These agentic

29:52systems are just very hard to write evals for. So there's a big debate just, like, in the AI engineering world about the role evals can play and all of that.

29:58And I think it's almost like it's come back around to maybe more of like the vibes based like just >> a then b testing >> tell it more stuff and you know we'll see where it goes.
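
The first-generation evals described above (a fixed prompt paired with the result set it should produce) can be sketched roughly as follows. This is a minimal illustration, not any panelist's actual harness: `EvalCase`, `toy_text_to_sql`, `run_evals`, `build_demo_db`, and the `orders` table are all hypothetical names, and the "model" is a canned lookup standing in for a real text-to-SQL call.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class EvalCase:
    prompt: str          # the question a user might ask
    expected_rows: list  # the result set the generated query should return


def toy_text_to_sql(prompt: str) -> str:
    """Hypothetical stand-in for a model call: a canned prompt -> SQL lookup."""
    canned = {
        "How many orders were placed?": "SELECT COUNT(*) FROM orders",
        "What is total revenue?": "SELECT SUM(amount) FROM orders",
    }
    # Unknown prompts fall back to a query that returns a single NULL row.
    return canned.get(prompt, "SELECT NULL")


def build_demo_db() -> sqlite3.Connection:
    """Create a small in-memory table to run the generated queries against."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, amount INTEGER)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)", [(1, 10), (2, 25), (3, 5)]
    )
    return conn


def run_evals(conn, cases, generate_sql):
    """Return (prompt, passed) pairs; a case passes on an exact result-set match."""
    results = []
    for case in cases:
        sql = generate_sql(case.prompt)
        try:
            rows = conn.execute(sql).fetchall()
        except sqlite3.Error:
            rows = None  # a query that fails to run counts as a failed eval
        results.append((case.prompt, rows == case.expected_rows))
    return results


CASES = [
    EvalCase("How many orders were placed?", [(3,)]),
    EvalCase("What is total revenue?", [(40,)]),
    EvalCase("Which customer spent the most?", [("acme",)]),
]

if __name__ == "__main__":
    for prompt, passed in run_evals(build_demo_db(), CASES, toy_text_to_sql):
        print("PASS" if passed else "FAIL", prompt)
```

The sketch compares result sets rather than SQL text, since two different queries can be equally correct. Exact-match checks like this assume one known right answer per prompt, which is part of why they are harder to write for multi-step agentic systems.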

30:06>> Um I think a lot of people are thinking that you know like or saying [clears throat] that this would be a bad time to like start as a software engineer and like you know if I was going back to school I wouldn't I wouldn't study software engineering because AI is going to mean that that doesn't you know you don't need software

30:21engineers. I don't necessarily believe that that's that that's that's quite true. But um what about what about for data? Like do you think that as a you know would you have advice for kind of early career folks that you know would you go into would you go into data?

30:37Would you become an analytics engineer or a data a data engineer? Um maybe start with Tristan since you're sort of the kind of the the creator of the analytics engineer and the sort of the statesman of the uh the modern data >> world.

30:51>> All right. [laughter] I put you on the spot. I'm >> not going to comment on any of that.

30:55>> I like Thank you for not saying elder statesman, by the [laughter] way. It's very >> He's got He's got hair and I don't, so I can't really >> uh I I This is a whole episode of the Cognitive Revolution podcast like by itself. Um I

31:14I don't buy that. I don't buy the argument that it's a bad time to get into software engineering. I don't buy that it's a bad time to get into analytics or or data engineering. Um the

31:27[snorts] the idea that we are going to run out of ways to apply intelligence to the problem sets that we work on all the time uh seems seems a little silly. And

31:39um e even if you simply context you

31:43simply like uh conceptualize yourself as a uh a way to interpret the real world

31:51and supply it as context to agents like I mean that that that could be a way that we add value in five years. Like that that is a a real version of the the multiverse. Um, but that's still incredibly valuable. Like, uh, LLMs have no way of interacting with the real world and like you have this massive

32:13conceptual model. You have a, uh, of like the real world and the business problems that you are trying to solve and those uh that translation does in fact need to happen. Um so so it just it

32:28I think is a it is an extrapolation of a line that misses if if you assume that there's no role in the future for humans in either software engineering or the whole set of data uh professions. um you you're just

32:47not fully understanding what the value is that you bring every day and the, like, bumps along the road that need to happen to, like, you being obviated. Like, that's just not, I mean, in the grand scheme of our lifetimes, if that's 50 plus years, like, okay, who the hell knows, but, like, over the next 5 to 10 years

33:06like I don't I don't think that's a real thing. >> I think in every profession you kind of have this, like, distribution of people. It's like, probably approaches, like, some normal distribution where you have, like, the really good people, and then you kind of have this long tail of people who are doing maybe lower value stuff. So in software engineering I'm

33:22sure a lot of us have worked with people you'd kind of call the mythical 10x engineer. You know, the person who's thinking about systems and architecture and driving forward new ideas. The type of things it's, like, very hard to imagine an LLM doing today or anytime soon. You also have a long tail of folks who maybe are more junior or just, you know, not

33:39as skilled that are sort of just picking up tickets and implementing buttons and all of that. I think that in data you kind of have the same thing. You've got people who are, like, really deep, incredibly thoughtful data scientists, data analysts who bring a ton of perspective and statistical rigor to a problem. Um, where we can all look at

33:55that and say, "Hey, there's a lot this person does, whether it's the actual work they're doing, the way they're interacting with the rest of the org, that's hard to replicate for an agent." There's also a long tail of people who are doing lower value work. I mean, there's a lot of people with data in their job title that are picking up a ticket to go

34:09add a chart to a dashboard somewhere. And I think that the question that's kind of, I don't actually know the answer to, that's interesting is, like, will AI make it so that long tail of people can, like, elevate their game and elevate their work and go and do better and bigger things, or does it lop

34:23off the long tail and those people need to go find other jobs? I don't quite know the answer to that, but I do think the answer probably lives somewhere in examining what the really good people in any given profession do and thinking about, like, how you can help not just those people do a better job, but

34:38actually help more people like work to that level of of quality. Um, that's something I've been turning over a bunch and I think it's true in probably every domain, design, film, you know, everywhere where AI is actually being applied now. It's like that strikes me as the kind of fundamental question.

34:53It's like, what happens with the long tail? >> You have just said a lot of really smart things, but at the very beginning of that you said the words normal distribution and then you drew a power law [laughter] >> and you just, I switch my distribution quick because you think about [laughter] it's more like the, you know, the, we can

35:11get into it. Yeah. >> I [laughter] >> I'll add to that. I think like again 10 years ago everything was mobile first.

35:19There were mobile startups, mobile engineers, everything was mobile. And sure, now everything is AI, but I think that also will normalize, and yes, our jobs will change, but we're all mobile and we're all going to be AI. I think that's all, like, part of it. Um, maybe going back to your question, it's funny. I feel

35:37like on panels I always get asked like what advice would you give your kids or you know da and I'm like first of all if you have kids you know that they never listen to your advice. So if anyone can help me answer that question let me know. Um, I think that's the the harder question with our kids. But no, I mean,

35:52I would say like I think this is the best time to work in AI and data because

35:58the best thing that you can do for your life and your job and your career is to pursue something that's dynamic and changing. This is arguably the most interesting time in the history of tech, the most dynamic time in in the history of tech. It's the time when opportunities get created and it's a time for you to realize yourself in

36:15whatever way and whatever impact you want to make in the world. So I would argue this is a time to lean in, not to lean out.

36:21>> Yeah. I I mean I think we this is what we want as society. We don't like we used to have to go input the PDF by hand into the computer and now we can scan it and it goes in the computer and like yes the PDF inputter's job is not there anymore. The whole point like the reason

36:37that probably all of us are doing data analytics at some level is to make people more efficient and make better decisions and unlock them to do the good work. And all of these things are just examples that unlock people to do higher value things. Like we don't want to spend our time doing data entry or cleaning up or checking whether things are broken

36:56or I don't know what I would say data analytics is like visualizing. Um the idea is like jobs will always change and certain ones at the very margin like I don't know like industrial revolution type jobs like yes some will disappear but I think ultimately like I've gotten this question about SDRs over and over again. And it's like if an SDR can do 10

37:16times as many meetings I don't want fewer of them. I want more of them because like we're we're hiring people because they create ROI for the business. And so like yes the mix of your engineers is going to change a little bit. the mix of your data team is going to change a little bit, but if they can do 10 times as much work, like

37:32we want more of those people, not fewer of them. >> It's like, it's like the Jevons paradox.

37:36>> Yeah. >> Yeah. It's like you don't [clears throat] >> I don't know about, I'm sure it's true for all of us. Like software is easier to build now because you have AI. It doesn't mean we need, like, less engineers. In fact, I'm trying to hire, like, way more engineers because they can be way more productive.

37:48>> Exactly. >> Like we're just going to get a lot more software. >> So it sounds like everybody, everybody, oh, go ahead, Tristan. >> But that's kind of why I started off with a, like, does anybody believe that the amount of critical thinking that we need in the future is, like, going to go down? But it

38:00it is fascinating because it shows up in different industries or businesses in different ways.

38:05>> If you are a company that looks at the future as being much bigger, greater than the past, you're in a growth mindset. You are trying to do, you're trying to build more software, have more intelligence, all of this stuff. And if you are, uh, in a

38:21steady-state business, you might actually look at this as, like, an ability to do the same with less. Um, and so it does show up in different ways, but the future is always built by growth companies and not steady state.

38:34>> I think that's really well said. I think at times like this that that what we'll see looking back in 10 years is the difference between the companies who embrace this as a way to do more and the companies who embrace this as a way to like do less um or have less people.

38:47And I I think that'll be the Yeah, >> I think we have time for just one last like rapid fire uh rapid fire questions because it sounds like everybody agrees that you know data jobs are not going away. But what's like just quickly one one thing that you think is going to change about the way people do their

39:06jobs in in data in the next couple years? We can go. >> I think people are going to use AI more.

39:12That's the worst answer possible. It's just like I don't know. I I think it'll look a lot the same and a little bit different.

39:21>> Uh I think the user interfaces the UI will change dramatically. Like the way we we interact with solutions now is is going to be very very different. I don't know what that is like MCP, natural language, whatever it is, but like all of our UIs are going to have to dramatically change.

39:37>> Uh curiosity, flexibility are going to be the highest value skills. uh if you say that this is not the way that I learned to do it, then you're effed.

39:48Yeah, I I just think the the last 20 years there's been like anyone who's been in data has just heard data democratization and it's been kind of like you know we're going to spend all this time and energy bringing our data together and we put dashboards on top and um there's always this promise that got sold that like you'd have everyone

40:04in the organization be able to, like, access data and answer questions, and that never really happened. Like, you know, you go talk to companies, like, do you really feel data driven? And I think now that it's just, the friction to just ask a question and get an answer is, like, so much lower. It's like 10, 100 times

40:19lower. You're just going to see an amazing expansion in the number of people who are engaging in data work, asking data questions, thinking critically with data, and it's going to be amazing. So, really excited about that.

40:31>> Awesome. Well, thanks so much. Uh, really appreciate you taking the time to chat with us all.

40:35>> Thank you. [applause] [music]